Abstract: According to the latest estimates, the human brain performs some 38 000 trillion operations per second. When you compare this to the amount of information that reaches conscious awareness, the disproportion becomes nothing short of remarkable. What are the consequences of this radical informatic asymmetry? The Blind Brain Theory of the Appearance of Consciousness (BBT) represents an attempt to ‘explain away’ several of the most perplexing features of consciousness in terms of information loss and depletion. The first-person perspective, it argues, is the expression of the kinds and quantities of information that, for a variety of structural and developmental reasons, cannot be accessed by the ‘conscious brain.’ Puzzles as profound and persistent as the now, personal identity, conscious unity, and, most troubling of all, intentionality could very well be kinds of illusions foisted on conscious awareness by different versions of the informatic limitation expressed, for instance, in the boundary of your visual field. By explaining away these phenomena, BBT separates the question of consciousness from the question of how consciousness appears, and so drastically narrows the so-called explanatory gap. If true, it considerably ‘softens’ the hard problem. But at what cost?

How could they see anything else if they were prevented from moving their heads all their lives?

–Plato, The Republic

The time has come. What follows is a lightly abridged version of the first third of the essay, the full form of which can be found here:


Introduction: The Problematic Problem

How many puzzles whisper and cajole and actively seduce their would-be solvers? How many problems own the intellect that would overcome them?

Consciousness is the riddle that offers its own solutions. If consciousness as it appears is fundamentally deceptive, we are faced with the troubling possibility that we quite simply will not recognize the consciousness that science explains. It could be the case that the ‘facts of our deception’ will simply fall out of any correct theory of consciousness. But it could also be the case that a supplementary theory is required—a theory of the appearance of consciousness.

The central assumption of the present paper is that any final theory of consciousness will involve some account of multimodal neural information integration.1 Consciousness is the product of a Recursive System (RS) of some kind, an evolutionary twist that allows the human brain to factor its own operations into its environmental estimations and interventions. Thinking through the constraints faced by any such system, I will argue, provides a parsimonious way to understand why consciousness appears the way it does. The ability of the brain to ‘see itself’ is severely restricted. Once we appreciate the way limits on recursive information access are expressed in conscious experience, traditionally intractable first-person perspectival features such as the now, personal identity, and the unity of consciousness can be ‘explained away,’ thus closing, to some extent, the so-called explanatory gap.

The Blind Brain Theory of the Appearance of Consciousness (BBT) is an account of how an embedded, recursive information integration system might produce the peculiar structural characteristics we associate with the first-person perspective. In a sense, it argues that consciousness is so confusing because it literally is a kind of confusion. Our brain is almost entirely blind to itself, and it is this interval between ‘almost’ and ‘entirely’ wherein our experience of consciousness resides.

1. The differentiation and integration that so fundamentally characterize conscious awareness necessitate some system accessing multiple sources of information gleaned from the greater brain. This assumption presently motivates much of the work in consciousness research, including Tononi’s Information Integration Theory of Consciousness (2012) and Edelman’s Dynamic Core Hypothesis (2005). The RS as proposed here is an idealization meant to draw out structural consequences perhaps belonging to any such system.

The Facts of Informatic Asymmetry

There can be little doubt that the ‘self-conscious brain’ is myopic in the extreme when it comes to the greater brain. Profound informatic asymmetry characterizes the relationship between the brain and human consciousness: a dramatic quantitative disproportion between the information actually processed by the brain and the information that finds its way to consciousness. Short of isolating the dynamic processes of consciousness within the greater brain, we really have no reliable way to quantify the amount of information that makes it to consciousness. Inspired by cybernetics and information theory, a number of researchers made attempts in the 1950s and early 1960s, arriving at numbers that range from less than 3 to no more than 50 bits per second–almost preposterously low (Norretranders, 1999). More recent research on attentional capacity, though not concerned with quantifying ‘mental workload’ in information-theoretic terms, seems to confirm these early findings (Marois and Ivanoff, 2006). Even if this research reflects only one aspect of the overall ‘bandwidth of consciousness,’ we can still presume that whatever number researchers ultimately derive will be surprisingly low. Either way, the gulf between the 7 numbers we can generally keep in working memory and the estimated equivalent processing power of the average human brain, some 38 000 trillion operations per second or 38 petaflops (Greenemeier, 2009), is boggling to say the least.3
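To put the asymmetry in rough numbers, take the 38 000 trillion (3.8 × 10^16) operations per second cited above against the early estimates of 3 to 50 bits per second. This is an order-of-magnitude illustration only, and it rests on an assumption the estimates themselves do not license: operations per second and bits per second are not commensurable units, so treating one operation as comparable to one bit serves purely to convey scale.

$$
\frac{3.8\times10^{16}\ \text{ops/s}}{50\ \text{bits/s}} = 7.6\times10^{14}
\qquad\text{and}\qquad
\frac{3.8\times10^{16}\ \text{ops/s}}{3\ \text{bits/s}} \approx 1.3\times10^{16}
$$

On this loose reading, the brain’s processing outruns conscious uptake by roughly fifteen to sixteen orders of magnitude.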

[...]

We generally don’t possess the information we think we do!

[...]

One need only ask, ‘What is your brain doing now?’ to appreciate the vertiginous extent of informatic asymmetry.

[...]

At some point in our recent evolutionary past, perhaps coeval with the development of language,4 the human brain became more and more recursive, which is to say, more and more able to factor its own processes into its environmental interventions. Many different evolutionary fables may be told here, but the important thing (to stipulate at the very least) is that some twist of recursive information integration, by degrees or by leaps, led to human consciousness. Somehow, the brain developed the capacity to ‘see itself,’ more or less.

It is important to realize the peculiarity of the system we’re discussing here. The RS qua neural information processor is ‘open’ insofar as information passes through it the same as any other neural system. The RS qua ‘consciousness generator,’ however, is ‘closed,’ insofar as only recursively integrated information reaches conscious awareness. Given the developmental gradient of evolution, we can presume a gradual increase in capacity, with the selection of more comprehensive sourcing and greater processing power culminating in the consciousness we possess today.

There’s the issue of evolutionary youth, for one. Even if we were to date the beginning of modern consciousness as far back as, say, the development of hand-axes, that would only mean some 1.4 million years of evolutionary ‘tuning.’ By contrast, the brain’s ability to access and process external environmental information is the product of hundreds of millions of years of natural selection. In all likelihood, the RS is an assemblage of ‘kluges,’ the slapdash result of haphazard mutations that produced some kind of reproductive benefit (Marcus, 2008).

There’s its frame, for another. As far as informatic environments go, perhaps nothing known is more complicated than the human brain. Not only is it a mechanism with some 100 billion parts possessing trillions of interconnections, it continually rewires itself over time. The complexities involved are so astronomical that we literally cannot imagine the neural processes underwriting the comprehension of the word ‘imagine.’ Recently, the National Academy of Engineering named reverse-engineering the brain one of its Grand Challenges, the first step being to engineer the supercomputational and nanotechnological tools required even to begin properly (National Academy of Engineering, 2011).

And then there’s its relation to its object. Where the brain, thanks to locomotion, possesses a variable relationship to its external environment, allowing it to selectively access information, the RS is quite literally hardwired to the greater, nonconscious brain. Its information access is a function of its structural integration, and is therefore fixed to the degree that its structure is fixed. The RS must transform its structure, in other words, to attenuate its access.

These three constraints–evolutionary contingency, frame complexity, and access invariance–actually paint a quite troubling picture. They sketch the portrait of an RS that is developmentally gerrymandered, informatically overmatched, and structurally imprisoned–the portrait of a human brain that likely possesses only the merest glimpse of its inner workings. As preposterous as this might sound to some, it becomes more plausible the more cognitive psychology and neuroscience learn.

[...]

Most everyone, however, is inclined to think the potential for deception only goes so far–eliminativists included!6 The same evolutionary contingencies that constrain the RS, after all, also suggest the utility of the information it accesses. We have good reason to suppose that the information that makes it to consciousness is every bit as strategic as it is fragmental. We may only ‘see’ an absurd fraction of what is going on, but we can nevertheless assume that it’s the fraction that matters most...

Can’t we?

The problem lies in the dual, ‘open-closed’ structure of the RS. As a natural processor, the RS is an informatic crossroads, continuously accessing information from and feeding information to its greater neural environment. As a consciousness generator, however, the RS is an informatic island: only the information that is integrated finds its way to conscious experience. This means that the actual functions subserved by the RS within the greater brain—the way it finds itself ‘plugged in’—are no more accessible to consciousness than are the functions of the greater brain. And this suggests that consciousness likely suffers any number of profound and systematic misapprehensions.

This will be explored in far greater detail, but for the moment, it is important to appreciate the truly radical consequences of this, even if only as a possibility. Consider Daniel Wegner’s (2002) account of the ‘feeling of willing’ or volition. Given the information available to conscious cognition, we generally assume the function of volition is to ‘control behaviour.’ Volition seems to come first. We decide on a course of action, then we execute it. Wegner’s experiments, however, suggest what Nietzsche (1967) famously argued in the 19th century: that the ‘feeling of willing’ is post hoc. Arguing that volition as it appears is illusory, Wegner proposes that the actual function of volition is to take social ownership of behaviour.

As we shall see, this is precisely the kind of intuitive/experimental impasse we might expect given the structure of the RS. Since a closed moment of a far more extensive open circuit is all that underwrites the feeling of willing, we could simply be wrong. What is more, our intuition/assumption of ‘volition’ may have no utility whatsoever and yet ‘function’ perfectly well, simply because it remains systematically related to what the brain is actually doing.

The information integrated into consciousness (qua open) could be causally efficacious through and through and yet so functionally opaque (qua closed) that we can only ever be deluded by our attendant second-order assumptions. This means the argument for cognitive adequacy from evolutionary utility in no way discounts the problem that information asymmetry poses for consciousness. The question of whether the brain makes use of the information it makes use of is trivial. The question is whether self-consciousness is massively deceived...

The RS is at once a neural cog, something that subserves larger functions, and an informatic bottleneck, the proximal source of every hunch, every intuition, we have regarding who we are and what we do. Its reliability as a source literally depends on its position as a cog. This suggests that all speculation on the ‘human’ faces what might be called the Positioning Problem, the question of how far consciousness can be trusted to understand itself.7 As we have seen, the radicality of information asymmetry, let alone the problems of evolutionary contingency, frame complexity, and access invariance, suggests that our straits could be quite dire. The Blind Brain Theory of the Appearance of Consciousness simply represents an attempt to think through this question of information and access in a principled way: to speculate on what our ‘conscious brain’ can and cannot see.

3. ‘Information,’ of course, is a notoriously nebulous concept. Rather than feign any definitive understanding, I will simply use the term in the brute sense of ‘systematic differences,’ and ‘processing’ as ‘systematic differences making systematic differences.’ The question of the semantics of these systematic differences has to be bracketed for reasons we shall soon see. The idea here is simply to get a certain theoretical gestalt off the ground.

4. Since language requires the human brain to recursively access and translate its own information for vocal transmission, and since the limits of experience are also the limits of what can be spoken about, it seems unlikely that the development of language is not somehow related to the development of consciousness.

6. Even Paul Churchland (1989) eventually acknowledged the ‘epistemic merit’ of folk psychology, in a manner not so different from Dennett’s. BBT, as we shall see, charts a quite different course: by considering conscious cognition as something structurally open but reflectively closed to the cognitive activity of the greater brain, it raises the curious prospect (and nothing more) that ‘folk psychology’ or the ‘intentional stance’ as reflectively understood (as normative, intentional, etc.) is largely an artifact of reflection, and only seems to possess utility because it is reliably paired with inaccessible cognitive processes that are quite effective. It raises the possibility, in other words, that belief as consciously performed is quite distinct from belief as self-consciously described, which could very well suffer from what might be called ‘meta-recursive privation,’ a kind of ‘peep-hole view on a peep-hole view’ effect.

7. You could say the ‘positional reappraisal’ of experience and conscious cognition in the light of psychology and neuroscience is well underway. In keeping with our previous example, something like volition might be called a ‘tangled, truncated, compression heuristic.’ ‘Tangled,’ insofar as its actual function (to own behaviour post hoc) seems to differ from its intuitive function (to control behaviour). ‘Truncated,’ to the extent it turns on a kind of etiological anosognosia. ‘Compressed,’ given how little it provides in the way of phenomenal and/or cognitive information. And ‘heuristic’ insofar as it nevertheless seems to facilitate social cognition (though not in the way we think).

Chained to the Magician: Encapsulation

One of the things that make consciousness so difficult to understand is intentionality. Where other phenomena simply ‘resist’ explanation, intentional phenomena seem to be intrinsically antagonistic to functional explanation. Like magic tricks, one cannot explain them without apparently explaining them away. As odd as it sounds, BBT proposes that we take this analogy to magic at its word. It presumes that intentionality and other ‘inexplicables’ of consciousness like presence, unity, and personal identity, are best understood as ‘magic tricks,’ artifacts of the way the RS is a prisoner of the greater, magician brain.

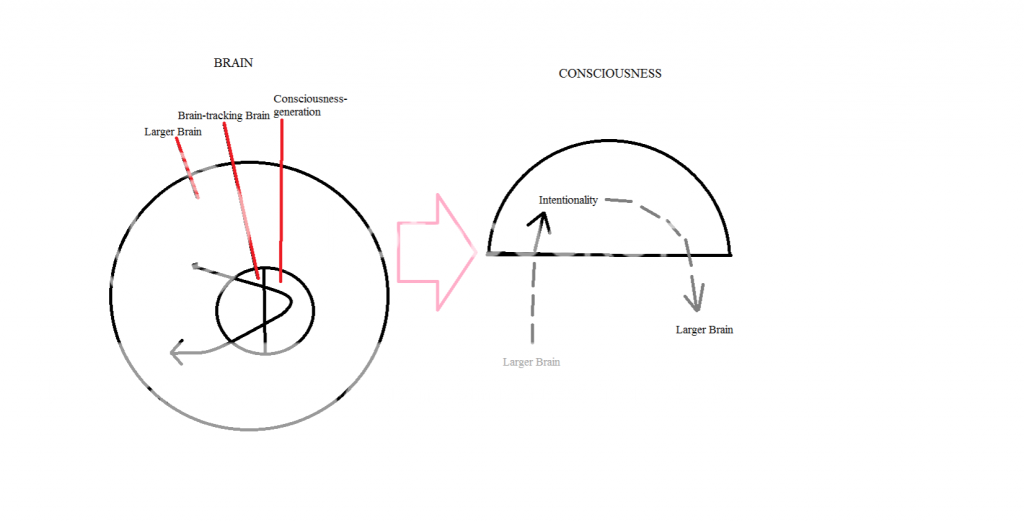

All magic tricks turn on what might be called information horizons: the magician literally leverages his illusions by manipulating what information you can and cannot access. The spectator is encapsulated, which is to say, stranded with information that appears sufficient. This gives us the structure schematically depicted in Fig. 1.

Fig. 1 In a magic act, the magician games the spectatorial information horizon to produce seemingly impossible effects, given the spectators’ existing expectations. Since each trick relies on convincing spectators they have all the information they need, a prior illusion of ‘sufficiency’ is required to leverage the subsequent trick.

Apparent sufficiency is all-important, since the magician is trying to gull you into forming false expectations. The ‘sense of magic’ arises from the disjunction between these expectations and what actually happens. Without the facade of informatic sufficiency–that is to say, without encapsulation–the most the trick can do is surprise you. This is the reason why explaining magic tricks amounts to explaining away the ‘magic’: explanations provide the very information that must be sequestered to foil your expectations.

I once knew this magician who genuinely loved ad hoc, living-room performances.

[...]

I would eventually have a chance to watch him, from over his shoulder, perform the very tricks that had boggled me earlier. In other words, I was able to watch the identical process from an entirely different etiological perspective. It has struck me as a provocative analogy for consciousness ever since, especially the way the mental seems to ‘disappear’ when we look over the brain’s shoulder.

So how strong is the analogy? In both cases you have encapsulation: the RS has no more recursive access to ‘behind the scenes’ information than the brain gives it. In both cases the product–magic, consciousness–seems to vanish as soon as information regarding etiological provenance becomes available. The structure, as Fig. 2 suggests, is quite similar.

Fig. 2 In consciousness we find a similar structure. But where the illusion of sufficiency is something the magician must bring about in a magic act, it simply follows in consciousness, given that it has no access whatsoever to any ‘behind the scenes.’ In this analogy, intentional phenomena are like magic to the extent that the absence of actual causal histories, ‘groundlessness,’ seems to be constitutive of the way they appear.

In this case we have multiple magicians, which is to say, any number of occluded etiologies. Now if the analogy holds, intentional phenomena, like magic, are something the brain can only cognize as such in the absence of the actual causal histories belonging to each. They require, in other words, the absence of certain kinds of information to make sense.

It’s worth noting, at this juncture, the way Fig. 2 in particular captures the ‘open-closed structure’ attributed to the RS. Given that some kind of integration of differentiated information is ultimately responsible for consciousness, this is precisely the ‘magical situation’ we should expect: a system that is at once open to the functional requirements of the greater brain, and yet closed by recursive availability. If the limits on recursive availability provide the informatic basis of our intentional concepts and intuitions, then the adequacy of those concepts and intuitions would depend on the adequacy of the information provided. Systematic deficits in the one, you can assume, would generate systematic deficits in the other.

[...]

There’s the consciousness we want to have, and then there’s the consciousness we have. The trick to finding the latter could very well turn on finding our way past the former.

[...]

The analogy warrants an honest look, at the very least. In what follows, I hope to show you a genuinely novel and systematically parsimonious way to interpret the first-person perspective, one that resolves many of its famous enigmas by treating them as a special kind of ‘magic’: something to be explained away. As it turns out, you are entirely what a roaming, recursive storm of information should look like, from the inside.

The Unbounded Stage: Sufficiency

If the neural correlates of consciousness possess information horizons, how are they expressed in self-conscious experience?

Is it just a coincidence that the first-person perspective also possesses a horizonal structure?

We already know consciousness as it appears is an informatic illusion in some respects. We also know that consciousness only gets a ‘taste’ of the boggling complexities that make it possible. When we talk about consciousness and its neural correlates, we are talking about a dynamic subsystem that possesses a very specific informatic relationship with a greater system: one that is not simply profoundly asymmetrical, but asymmetrical in a structured way.

As informatically localized, the RS has to possess any number of information horizons, ‘integration thresholds,’ where the information we experience is taken up. To say that the conscious brain possesses ‘information horizons’ is merely to refer to the way the RS qua consciousness generator constitutes a closed system. When it comes to information, consciousness ‘gets what it gets.’

As trivial as this observation is, it is precisely where things become interesting. Why? Because if some form of recursive neural processing simply is consciousness, then we can presume encapsulation.9 If we can presume encapsulation, then we can presume the apparent sufficiency of information accessed. Since the insufficiency of accessed information will always be a matter of more information, sufficiency will be the perennial default. Not only does consciousness get what it gets, it gets everything to be gotten.

Why is default sufficiency important? For one, it suggests that neural information horizons will express themselves in consciousness in a very peculiar way. Consider your visual field, the way seeing simply vanishes into some kind of asymptotic limit–a limit with one side. Somehow, our visual field is literally encircled by a blindness that we cannot see, leaving visual attention with the peculiar experience of experience running out. Unless we suppose that experience is utterly bricked in with neural correlates (which would commit us to asserting that we possess ‘vision-trailing-away-into-asymptotic-nothingness’ NCs), it seems obvious to suppose that the edge of the visual field is simply where the visual information available for conscious processing comes to an end.

The edge of our visual field is an example of what might be called an asymptotic limit.

Fig. 3 The edge of our visual field provides a striking example of the way information horizons often find conscious expression as ‘asymptotic limits,’ intramodal boundaries that only possess one side. Given that we have no visual information pertaining to the limits of vision, the boundary of our visual field necessarily remains invisible. This structure, BBT suggests, is repeated throughout consciousness, and is responsible for a number of the profound structural features that render the first-person perspective so perplexing.

An asymptotic limit, to put it somewhat paradoxically, is a limit that cannot be depicted the way it’s depicted above. Fig. 3 represents the limit as informatically framed; it provides the very information that asymptotic limits sequester and so dispels default sufficiency.

Limits with one side don’t allow graphic representation of the kind attempted in Fig. 3 because of the way these models shoehorn all the information into the visual mode. One might, for instance, object that asymptotic limits confront us all the time without, as in the case of magic, the attendant appearance of sufficiency. We see the horizon knowing full well the world outruns it. We scan the skies knowing full well the universe outruns the visible. Even when it comes to our visual field, we know that there’s always ‘more than what meets the eye.’ Nevertheless, seeing all there is to see at a given moment is what renders each of these limits asymptotic. We possess no visual information regarding the limits of our visual information. All this really means is that asymptotic limits and their attendant sufficiencies are mode specific. You could say our ability to informatically frame our visual field within memory, anticipation, and cognition is the only reason we can intuit its limitations at all. To paraphrase Glaucon from the epigraph, one has to see more to know there is more to see (Plato, 1987, p. 317).

This complication of asymptotic limits and sufficiencies is precisely what we should expect, given the integrative function of the RS. Say we parse some of the various information streams expressed in consciousness as depicted in Fig. 4.

Fig. 4 This graphic, as simple as it is, depicts various informatic modalities in a manner that bears information regarding their distinction. They are clearly bounded and positioned apart from one another. This is precisely the kind of information that, in all probability, would not be available to the RS, given the constraints considered above.

Each of these streams is discrete and disparately sourced prior to recursive integration. From the standpoint of recursive availability, however, we can look at each of these circles as ‘monopolistic spotlights,’ points where the information ‘lights up’ for conscious awareness. Given the unavailability of information pertaining to the spaces between the lights, we can assume they would not even exist for consciousness. Recursive availability, in other words, means these information streams would be ‘sutured,’ bound together as depicted in Fig. 5.10

Fig. 5 Given the asymptotic expression of informatic limits in conscious awareness, we might expect the discrete information streams depicted in Fig. 4 to appear to be ‘continuous’ from the standpoint of consciousness.

The local sufficiencies of each mode simply run into the sufficiencies of other modes, forming a kind of ‘global complex’ of sufficiencies with their corresponding asymptotic limits. Once again, the outer boundary as depicted above needs to be considered heuristically: the ‘boundaries of consciousness’ do not possess any ‘far side.’ It’s not so much a matter of the sufficiency of the parts contributing to the sufficiency of the whole as it is a question of availability: absent any information regarding its global relation to its neural environment, that environment does not exist for consciousness, not even as the ‘absence’ depicted above. Even though it is the integration of modalities that makes the local limits of any one mode (such as vision) potentially available, there is a sense in which the global limit always has to outrun recursive availability. As strange as it sounds, consciousness is ‘amoebic.’ Whatever is integrated is encapsulated, and encapsulation means asymptotic limits and sufficiency. Given the open-closed structure of the RS, you might say that a kind of ‘asymptotic absolute’ has to afflict the whole, and with it, what might be called ‘persistent global sufficiency.’11

So what we have, then, is a motley of local asymptotic limits and sufficiencies bound within a global asymptotic limit and sufficiency. What we have, in other words, is an outline for something not so unlike consciousness as it appears to us. At this juncture, the important thing to note is the way it seems to simply fall out of the constraints on recursive integration. The suturing of the various information streams is not the accomplishment of any specialized neural device over and above the RS. The RS simply lacks information pertaining to their insufficiency. The same can be said of the asymptotic limit of the visual field: Why would we posit ‘neural correlates of vanishing vision’ when the simple absence of visual information is device enough?

[...]

Information horizons: The boundaries that delimit the recursive neural access that underwrites consciousness.

Encapsulation: The global result of limited recursive neural access, or information horizons.

Sufficiency: The way the lack of intra-modal access to information horizons renders a given modality of consciousness ‘sufficient,’ which is to say, at once all-inclusive and unbounded at any given moment.

Asymptotic limits: The way information horizons find phenomenal expression as ‘limits with one side.’

We began by asking how information horizons might find phenomenal expression. What makes these concepts so interesting, I would argue, is the way they provide direct structural correlations between certain peculiarities of consciousness and possible facts of brain. They also show us how what seem to be positive features of consciousness can arise without neural correlates to accomplish them. Once you accept that consciousness is the result of a special kind of informatically localized neural activity, information horizons and encapsulation directly follow. Sufficiency and asymptotic limits follow in turn, once you ask what information the conscious brain can and cannot access.

Moving on, I hope to show how these four concepts, along with the open/closed structure of the RS, can explain some of the most baffling structural features of consciousness. By simply asking the question of what kinds of information the RS likely lacks, we can reconstruct the first-person perspective, and show how the very things we find the most confusing about consciousness, and the most difficult to plug into our understanding of the natural world, are actually confusions.

9. This is not to be confused with ‘information encapsulation’ as used in Pylyshyn (1999) and debates regarding modularity. Metzinger’s account of ‘autoepistemic closure’ somewhat parallels what is meant by encapsulation here. As he writes, “‘autoepistemic closure’ is an epistemological and not (at least not primarily) a phenomenological concept. It refers to an ‘inbuilt blind spot,’ a structurally anchored deficit in the capacity to gain knowledge about oneself” (2003, p. 57). As an intentional concept embedded in a theoretical structure possessing many other intentional concepts, however, it utterly lacks the explanatory resources of encapsulation, which turns on a non-semantic concept of information.

10. As we shall see below, this has important consequences regarding the question of the unity of consciousness.

11. Thus the profound monotonicity of consciousness: As an encapsulated product of recursive availability, the availability of new information can never ‘switch the lights out’ on existing information.

Other quotes to help you understand (as this can be dense stuff):

The Difference Between Silence and Lies

Why evolve the computationally exorbitant capacity to track ‘motives’ in our brain when simply making up even better motives is so much easier?

The Skyhook Theory

Since the mechanical complexities of brains so outrun the cognitive capacities of brains, managing brains (others’ or our own) requires a toolbox of very specialized tools, ‘fast and frugal’ heuristics that enable us to predict/explain/manipulate brains absent information regarding their mechanical complexities. What Dennett calls ‘taking the intentional stance’ occurs whenever conditions trigger the application of these heuristics to some system in our environment.

[...]

As we saw above, BBT characterizes the intentional stance in mechanical terms, as the environmentally triggered application of heuristic devices adapted to solving social problem-ecologies.

The Crux

1) Cognition is heuristic all the way down.

2) Metacognition is continuous with cognition.

3) Metacognitive intuitions are the artifact of severe informatic and heuristic constraints. Metacognitive accuracy is impossible.

4) Metacognitive intuitions only loosely constrain neural fact. There are far more ways for neural facts to contradict our metacognitive intuitions than otherwise.

Godelling in the Valley

So BBT suggests that the ‘a priori’ is best construed as a kind of cognitive illusion, a consequence of the metacognitive opacity of those processes underwriting those ‘thoughts’ we are most inclined to call ‘analytic’ and ‘a priori.’ The necessity, abstraction, and internal relationality that seem to characterize these thoughts can all be understood in terms of information privation, the consequence of our metacognitive blindness to what our brain is actually doing when we engage in things like mathematical cognition. The idea is that our intuitive sense of what it is we think we’re doing when we do math—our ‘insights’ or ‘inferences,’ our ‘gists’ or ‘thoughts’—is fragmentary and deceptive, a drastically blinkered glimpse of astronomically complex, natural processes. The ‘a priori,’ on this view, characterizes the inscrutability, rather than the nature, of mathematical cognition.

[...]

On BBT, our various second-order theoretical interpretations of mathematics are chronically underdetermined for the same reason any theoretical interpretation in science is underdetermined: the lack of information. What dupes philosophers into transforming this obvious epistemic vice into a beguiling cognitive virtue is simply the fact that we also lack any information pertaining to the lack of this information. Since they have no inkling that their murky inklings involve ‘murkiness’ at all, they simply assume the sufficiency of those inklings.

BBT therefore predicts that the informational dividends of the neurocognitive revolution will revolutionize our understanding of mathematics. At some point we’ll conceive our mathematical intuitions as ‘low-dimensional shadows’ of far more complex processes that escape conscious cognition. Mathematics will come to be understood in terms of actual physical structures doing actual physical things to actual physical structures. And the historical practice of mathematics will be reconceptualized as a kind of inter-cranial computer science, as experiments in self-programming.

Now as strange as it might sound, you have to admit this makes an eerie kind of sense. Problems, after all, are posed and answers arise. No matter how finely we parse the steps, this is the way it seems to work: we ‘ponder,’ or input, problems, and solutions, outputs, arise via ‘insight,’ and successes are subsequently committed to ‘habit’ (so that the systematicities discovered seem to somehow exist ‘all at once’). This would certainly explain Hintikka’s ‘scandal of deduction,’ the fact that purportedly ‘analytic’ operations regularly provide us with genuinely novel information. And it decisively answers the question of what Wigner famously called the ‘unreasonable effectiveness’ of mathematical cognition: mathematics can so effectively solve nature, and so enable science, simply because mathematics is nature, a kind of cognitive Swiss Army Knife extraordinaire.

Cognitive Deficits Predicted by the Blind Brain Theory

Of course, all these cognitive deficits need to be ecologically qualified: mistaking the fact of the matter in a manner that economizes neurocomputational loads can generate cognitive efficiencies as well. The very neglect that renders heuristics inapplicable to the bulk of problem ecologies renders them that much more effective when it comes to the subset of problem ecologies they are adapted to. It’s not an all-or-nothing affair, and as I have found discussing the philosophical implications of these ‘cognitive illusions,’ the question of applicability is where the primary battleground lies.

[...]

ORIGINATION EFFECTS: Informatic neglect leads metacognition to intuit causal discontinuities between complex systems and their environments. The behaviour of the resulting systems seems to arise ‘ex nihilo’ and thus to be noncausally constrained, leading to metacognitive posits such as ‘rules,’ ‘reasons,’ ‘goals,’ ‘desires,’ and so forth.

ONLY-GAME-IN-TOWN EFFECTS: Since information inaccessible to our brain simply makes no difference to neurofunctionality, the lack of information pertaining to the insufficiency of information generates the illusion of implicit sufficiency, the default assumption that the information available is all the information required. What Daniel Kahneman (2012) rather cumbersomely calls WYSIATI (or ‘What-You-See-Is-All-There-Is’) in his work represents a special case of this effect.

[...]

SIMPLICITY/IDENTIFICATION EFFECTS: Informatic neglect leads us to intuit complexes as simples. The most basic experimental example of this is found in the psychophysical phenomenon of ‘flicker fusion,’ the way oscillating lights and sounds will be perceived as continuous when the frequency passes beyond certain thresholds. The fact is, all of experience, cognitive or perceptual, is characterized by such ‘fusion illusions.’ In the absence of information (or difference-making differences), we consciously experience and/or cognize identities, which is to say, mistake matter-of-fact heterogeneities for homogeneities. Still frames become ‘moving pictures.’ Ants on the sidewalk become spilled paint. A bottomless universe becomes a local celestial sphere. Whole cultures become cartoon caricatures. Brains become minds. And so on.

Attention All Attention Skeptics

Our brains are all but opaque to our brains, thanks to their astronomical complexity, among other things.

[...]

The task stance is the most economical way to conceive the experimental scene because it is the most economical way to conceive human action. But why should either of those economies apply to the empirical question of attention?

[...]

BBT has, for quite some time now, had me looking at psychological experimentation mechanistically, as kinds of information-extracting meta-systems consisting of the regimented interactions of various subsystems, what we intuitively think of as ‘researchers’ and ‘subjects’ and ‘experimental apparatuses.’ As a result, I now generally look at intentional characterizations like Wu’s against this baseline, as information-neglecting heuristics, not so much accurate descriptions of what is going on as economical ways to navigate what is going on given certain problem contexts.

[...]

Memory isn’t an aviary. Reason isn’t a charioteer battling unruly moral and immoral horses. Odds are, attention isn’t a selective spotlight. We should expect fractionation, surprises, continuous complication. And even if you have faith in the theoretical accuracy of metacognition, the bottom line is you simply don’t know where those intuitions sit on the information food chain. Nothing need be accurate about our intuitions of brain function for the brain to function. Given this, using them to conceptually and operationally anchor an empirical research program smacks less of necessity than of a leap of faith.

I believe I have hinted enough at these matters in my time here, so I'll leave it at that. I wonder how you all will respond?
